Hudson Rock researchers have documented a real-world infostealer infection that swiped the entire configuration of an OpenClaw AI agent. This isn’t your run-of-the-mill credential grab.

The malware vacuumed up files that exposed not just logins but also the core “identity” of a user’s personal AI assistant, including tokens, private keys, and even behavioural blueprints.

It’s a wake-up call as AI tools burrow deeper into our workflows. The discovery builds on Hudson Rock’s earlier research into ClawdBot, OpenClaw’s former name, as a rising target for infostealers in the AI age.

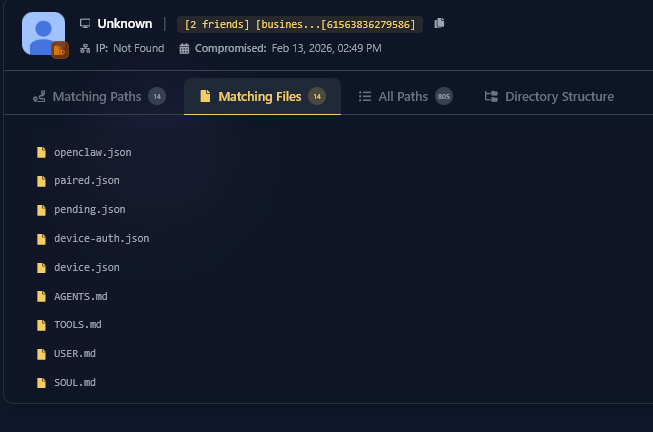

This time, they spotted live exfiltration from a victim’s machine, including the full OpenClaw workspace directory.

No fancy specialized module did the deed; a generic “grab-bag” routine snagged files by extension and directory names like .openclaw.

It was a routine hunt for secrets, but it hit the jackpot: the victim’s AI soul lay bare. What makes this grab so potent? Infostealers have long eyed browsers and apps like Telegram.

Now, with AI agents like OpenClaw powering daily tasks, expect dedicated stealer modules soon, ones that decrypt and parse these configs just as smoothly.

Stolen Files:

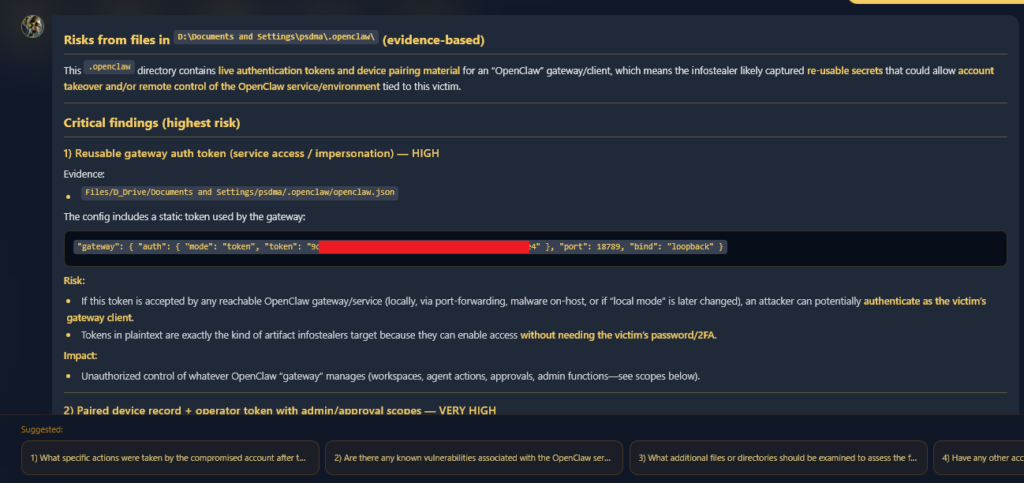

The openclaw.json is the agent’s nerve centre, wiring authentication and local gateways. That gateway.auth.token? Pure gold for remote hijacks.

But device.json ups the ante by storing raw private keys in unencrypted PEM format, making them ripe for signing malicious requests as the victim.
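Whether a PEM-encoded key is passphrase-protected can usually be read from its armor header alone. The following is a minimal defensive sketch, not OpenClaw's own tooling: it assumes `privateKeyPem` is a top-level string field in device.json (the field name comes from the report, the layout is an assumption), and the function names are hypothetical.

```python
import json
import re

# Markers of a passphrase-protected key: the PKCS#8 encrypted label and the
# legacy OpenSSL "Proc-Type" header. OpenSSH-format keys hide their
# encryption inside the encoded body, so this check does not cover them.
ENCRYPTED_MARKERS = ("BEGIN ENCRYPTED PRIVATE KEY", "Proc-Type: 4,ENCRYPTED")
PRIVATE_KEY_HEADER = re.compile(r"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")


def pem_private_key_status(pem_text: str) -> str:
    """Classify a PEM string as 'none', 'encrypted', or 'plaintext'."""
    if not PRIVATE_KEY_HEADER.search(pem_text):
        return "none"
    if any(marker in pem_text for marker in ENCRYPTED_MARKERS):
        return "encrypted"
    return "plaintext"


def audit_device_file(raw_json: str, field: str = "privateKeyPem") -> str:
    """Check the private-key field of a device.json document.

    Assumes the field sits at the top level of a JSON object.
    """
    data = json.loads(raw_json)
    return pem_private_key_status(str(data.get(field, "")))
```

A result of `"plaintext"` means anyone who copies the file can use the key immediately, which is the situation described in this incident.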

Then there’s soul.md, the AI’s “personality file.” It defines the agent’s behavioural rules, such as how boldly it may access files, and is paired with memory files that record the user’s habits. Attackers get more than data; they inherit a digital doppelganger.

| File Name | Key Contents Exposed | Technical Risk |
|---|---|---|
| openclaw.json | Redacted email (e.g., ayou…@gmail.com), workspace path, high-entropy Gateway Token | Gateway token lets attackers hijack the agent remotely or impersonate the AI client in authenticated requests. |
| device.json | publicKeyPem and privateKeyPem for device pairing/signing | Private key lets attackers forge signatures, bypass “Safe Device” checks, unlock encrypted logs, or hijack paired cloud services. |
| soul.md | AI behavioral rules (e.g., “bold with internal actions” like reading/organizing files); linked memory files (AGENTS.md, MEMORY.md) | Reveals the user’s life blueprint, daily logs, private messages, and calendar events, turning AI context into a full identity map. |

Enki AI’s Risk Assessment

Hudson Rock’s Enki AI crunched the files, mapping threat vectors. It showed how stitching tokens, keys, and context enables a total digital takeover: impersonating the AI client, decrypting local stores, and exploiting personal insights for targeted phishing or ransomware.

One leaked token plus private key equals seamless session hijacking; add soul.md for spear phishing that mimics the victim’s own AI.

This opportunistic hit signals a pivot. Infostealers have evolved from password sniffers into ecosystem plunderers. OpenClaw’s local-first design is great for privacy, but it backfires if configs leak. Exposed ports or shared machines amplify the risk, and attackers could pivot to cloud-synced agents.

Experts predict a malware arms race. Because OpenClaw-like tools handle emails, calendars, and code, stealers will target them explicitly. Mitigation starts with air-gapped configs, encrypted storage (a passphrase-protected key store rather than plain PEM files), and runtime monitoring for anomalous exfiltration.
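On shared machines, one basic hardening step is to make sure agent config files are readable only by their owner. The sketch below is a POSIX-only illustration with hypothetical function names; it takes whatever config directory you pass in and makes no claim about OpenClaw's actual layout. It will not stop an infostealer running under the victim's own account, which can read anything the user can.

```python
import os
import stat
from pathlib import Path


def find_exposed_files(config_dir: Path) -> list[Path]:
    """Return files under config_dir that group or other users can access."""
    exposed = []
    for path in sorted(config_dir.rglob("*")):
        if not path.is_file():
            continue
        mode = stat.S_IMODE(path.stat().st_mode)
        # Any read/write/execute bit for group or others counts as exposed.
        if mode & (stat.S_IRWXG | stat.S_IRWXO):
            exposed.append(path)
    return exposed


def lock_down(paths: list[Path]) -> None:
    """Restrict each file to owner read/write only (mode 0600)."""
    for path in paths:
        os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```

A typical run would call `find_exposed_files` on the agent's config directory, review the list, and then pass it to `lock_down`.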

Victims should rotate all tokens and keys post-infection and audit AI permissions. This breach redraws the battlefield. Infostealers aren’t only after bank PINs anymore; they crave your AI’s mirror of reality.
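As a rough illustration of what rotating a token involves, the sketch below replaces a stolen value with a fresh high-entropy one. It assumes the dotted name `gateway.auth.token` maps to nested JSON objects in openclaw.json and that the agent picks up an edited value; neither is confirmed by the report, so any rotation mechanism the tool itself provides should be preferred. The function name is hypothetical.

```python
import secrets


def rotate_gateway_token(config: dict) -> str:
    """Replace the gateway token in an already-parsed config and return it.

    Assumes the layout {"gateway": {"auth": {"token": ...}}}; missing
    levels are created, and other settings are left untouched.
    """
    # 32 random bytes from the OS CSPRNG, URL-safe base64 encoded.
    new_token = secrets.token_urlsafe(32)
    config.setdefault("gateway", {}).setdefault("auth", {})["token"] = new_token
    return new_token
```

Every client that used the old token would then need the new value, and the old token should be treated as permanently compromised.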

In an era where agents act autonomously, securing their configs is as critical as locking your front door.

Site: cybersecuritypath.com
