On February 20, 2026, Cloudflare suffered a major outage lasting over six hours, affecting thousands of websites and services worldwide due to unintended BGP route withdrawals for Bring Your Own IP (BYOIP) customers.

Although not a cyberattack, the incident exposed critical vulnerabilities in network configuration management and API handling, raising alarms in cybersecurity circles about infrastructure resilience.

The outage began at 17:48 UTC when a Cloudflare configuration change in the BYOIP pipeline triggered the withdrawal of approximately 1,100 IP prefixes via Border Gateway Protocol (BGP).

BYOIP allows customers to use their own IP addresses routed through Cloudflare’s network, but the change caused routers to stop advertising these prefixes globally, rendering services unreachable.

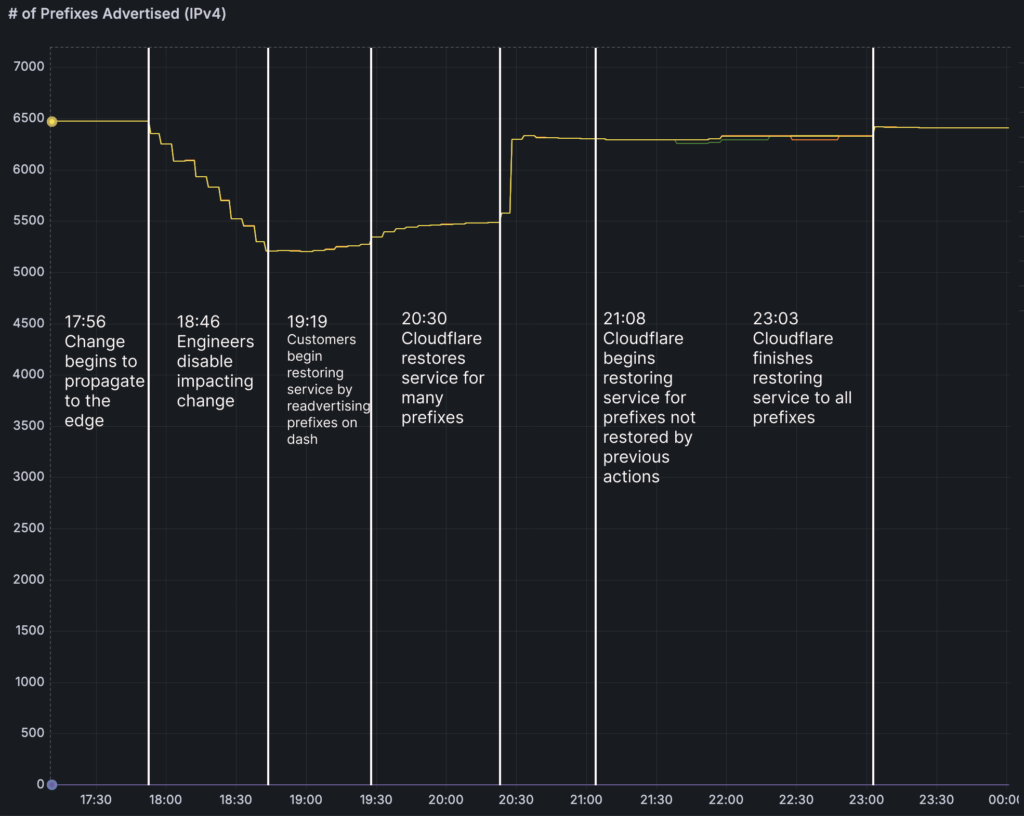

Impact peaked between 17:56 and 18:46 UTC, with about 25% of the 4,306 BYOIP prefixes (out of 6,500 total) affected. Connections timed out, and the withdrawals triggered BGP path hunting, in which routers cycle through progressively longer, already-invalid alternate paths before the withdrawal finally converges.
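The 25% figure follows from the prefix counts reported in this article, taking the approximately 1,100 withdrawn prefixes as the numerator:

$$
\frac{1{,}100}{4{,}306} \approx 0.255 \approx 25\%,
\qquad
\frac{4{,}306}{6{,}500} \approx 0.66
$$

In other words, roughly a quarter of BYOIP prefixes were withdrawn, and BYOIP prefixes make up about two-thirds of the 6,500 total.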

Full resolution took 6 hours and 7 minutes, ending at 23:03 UTC after manual restorations. Cloudflare’s 1.1.1.1 website showed 403 “Edge IP Restricted” errors, though DNS resolution remained operational. The iterative rollout limited scope, sparing some customers, but highlighted risks in propagating changes to edge servers instantly.

A buggy cleanup sub-task in the Addressing API, Cloudflare’s authoritative IP database, was the culprit. Intended to automate prefix deletions requested by customers, the task issued a flawed API call, `GET /v1/prefixes?pending_delete`, which included the `pending_delete` parameter but no value for it.

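Server-side logic checked `req.URL.Query().Get("pending_delete")`. In Go, `Get` returns an empty string both when a key is absent and when it is present without a value, so the handler treated the flag as unset: instead of running the pending-deletions query, it fetched all prefixes.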

The faulty query queued all BYOIP prefixes and their service bindings for deletion, and the resulting withdrawals propagated across the network. Staging tests missed the bug because of insufficient mock data and incomplete coverage of scenarios in which the task runner acts autonomously. The code was merged on February 5 but deployed only on the day of the incident, bypassing fuller safeguards.
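Why thin mock data hides this class of bug can be shown with a small, hypothetical check: if every prefix in the fixture is already pending deletion, a query that returns everything still looks correct. The names `selectAll` and `onlyPending` are illustrative assumptions, not part of Cloudflare’s test suite.

```go
package main

import "fmt"

// Prefix is a hypothetical, simplified address record.
type Prefix struct {
	CIDR          string
	PendingDelete bool
}

// selectAll stands in for the faulty query path: it ignores the flag and
// returns every prefix it is given.
func selectAll(all []Prefix) []Prefix { return all }

// onlyPending reports whether every selected prefix was actually marked for
// deletion, which is the invariant a cleanup task depends on.
func onlyPending(selected []Prefix) bool {
	for _, p := range selected {
		if !p.PendingDelete {
			return false
		}
	}
	return true
}

func main() {
	// Thin mock data: every prefix is already pending, so the bug is invisible.
	thin := []Prefix{{"198.51.100.0/24", true}}
	// Richer mock data: includes a live prefix that must never be selected.
	rich := []Prefix{{"198.51.100.0/24", true}, {"192.0.2.0/24", false}}

	fmt.Println("thin mock passes:", onlyPending(selectAll(thin)))
	fmt.Println("rich mock passes:", onlyPending(selectAll(rich)))
}
```

Adding even one prefix that is not pending turns the invariant check into a failing test, which is the kind of coverage the article says was missing.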

Impacts spanned multiple products that rely on BYOIP advertisements.

Ripple effects reached downstream services such as Laravel Cloud, which was down for more than three hours. Users saw latency spikes during recovery as bindings were repopulated to edge servers.

Affected prefixes fell into three states: withdrawn only (customers could fix these themselves via the dashboard), partially unbound (some service bindings removed), or fully unbound (requiring global configuration pushes). About 800 prefixes were restored by 20:20 UTC; the remaining 300 needed manual database recovery. With the “Code Orange: Fail Small” safeguards not yet in place, rollbacks depended on the snapshots available and were slowed by propagation delays.

The timeline shows a rapid response once the bug was identified at 18:46 UTC:

| Time (UTC) | Event |
|---|---|
| 17:56 | Impact starts; withdrawals propagate. |
| 18:46 | Bug identified; sub-task halted. |
| 19:19 | Dashboard self-mitigation advised. |
| 23:03 | Full restoration complete. |

While Cloudflare insists there was no malicious activity, the bug’s effects resemble those of BGP attacks such as hijacks and state-sponsored route leaks. Accidental mass withdrawals underscore the fragility of BGP, a perennial cybersecurity vector: unadvertised prefixes produce DoS-like effects without any exploit. The API query flaw also highlights parameter-tampering risks, which resemble injection vulnerabilities when input goes unvalidated.

In a zero-trust era, this erodes confidence in CDN providers that handle BGP for critical infrastructure. No data breaches occurred, but the downtime amplified other threats: sites left without mitigation were exposed to opportunistic attacks during the blackout.

Cloudflare ties its fixes to “Code Orange: Fail Small,” which mandates health-gated rollouts, break-glass procedures free of dependencies, and failure-mode testing. Key steps include standardizing API schemas for flags, separating operational state from configuration state (with snapshots), and adding circuit breakers for bulk withdrawals.

Monitoring will halt rapid BGP changes, and per-customer service health signals will feed the circuit breakers. The goal is instant per-customer rollbacks, prioritizing stability over API immediacy. Industry watchers praise Cloudflare’s transparency but urge independent audits of its BGP controls.

Site: cybersecuriytpath.com

Reference: source
